Foundations of Data Engineering: What is Data Engineering? A Non-Buzzword Guide

A clear, jargon-free introduction to what data engineering truly is, why it matters, and how it powers modern AI and analytics.

The Invisible Engine Behind AI & Analytics

Every second, an unimaginable flood of data is created—tweets flying across the internet, credit card transactions being processed, medical records updated, videos streamed, sensors measuring temperatures, and millions of people clicking, scrolling, and searching. Data is everywhere, but raw data on its own is messy, chaotic, and, quite frankly, useless.

Before it can power AI, fuel analytics dashboards, or personalize your Netflix recommendations, it needs structure. It needs to be collected, cleaned, and stored in a way that makes sense.

That’s where Data Engineering comes in.

Think of it as the behind-the-scenes crew of a blockbuster movie. You rarely see them, but without their work—setting up cameras, managing lighting, editing scenes—the final product would be a disaster. AI and analytics are the stars of the show, but data engineering is the team making sure everything runs smoothly behind the curtain.

But what exactly is data engineering? Is it just another fancy tech term? Nope. Let’s cut through the noise and get to the core of it.

The Core of Data Engineering – No Buzzwords, Just Clarity

Imagine a bustling city. Roads, highways, and bridges connect every district, ensuring smooth transportation. Now, think of data engineering as the infrastructure of the data world—it ensures that information flows seamlessly from where it’s generated to where it’s needed.

Without well-planned roads, a city plunges into chaos—traffic jams, detours, and inefficiencies everywhere. Likewise, without proper data engineering, businesses drown in a sea of unstructured, unreliable data, unable to extract anything meaningful from it.

But what exactly does a Data Engineer do?

What is Data Engineering?

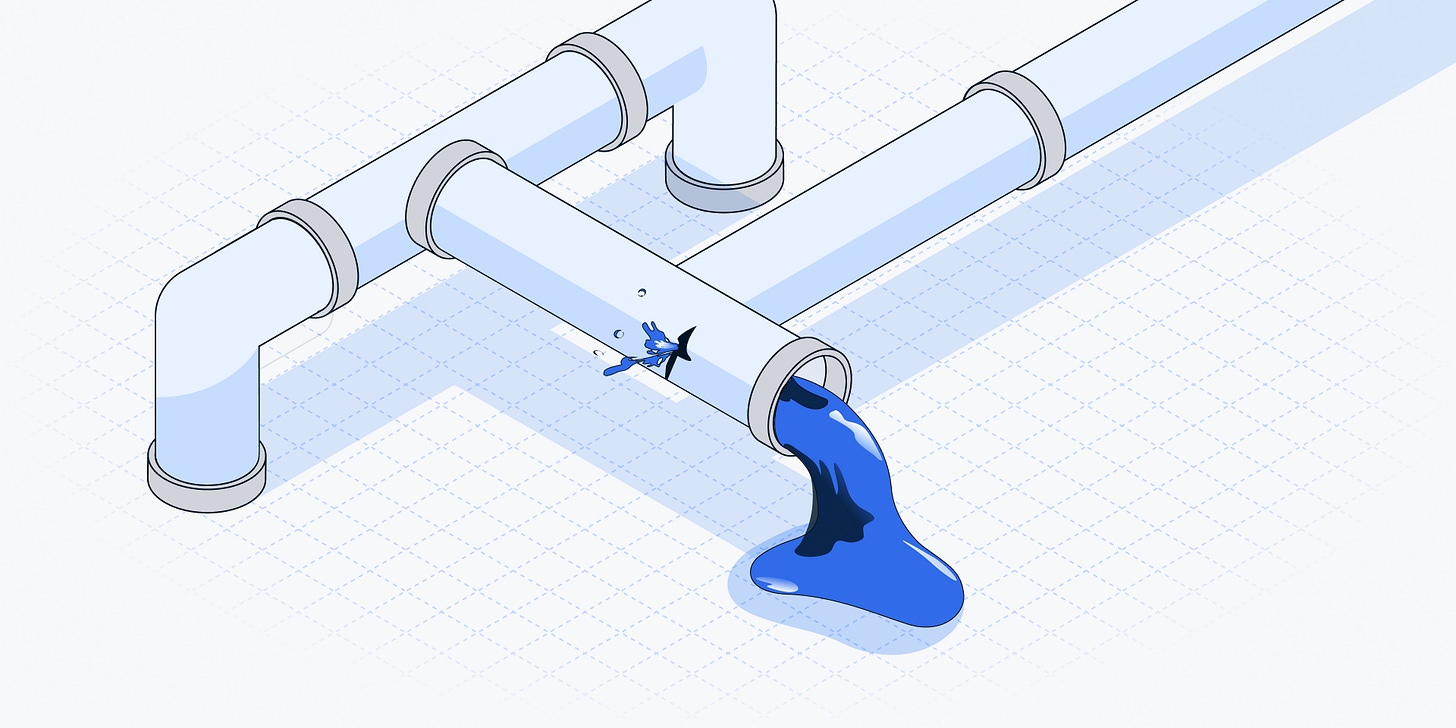

At its core, data engineering is about building and maintaining the pipelines that move data efficiently and reliably. But it’s not just about movement—data engineers ensure that data is clean, structured, and stored in ways that make sense for those who need to use it.

Think of it as running a supply chain for data:

Extracting data from various sources (databases, APIs, IoT sensors, logs, real-time streams).

Cleaning, transforming, and structuring it to remove inconsistencies, errors, and duplicates.

Storing it efficiently in databases, data warehouses, or data lakes.

Making it accessible for analysts, machine learning models, and applications that rely on it.
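To make these four stages concrete, here is a minimal sketch using only the Python standard library. The CSV content, the `orders` table, and the function names are hypothetical, and an in-memory SQLite database stands in for a real warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export: inconsistent casing, stray whitespace, and a duplicate row.
RAW_CSV = """order_id,customer,amount
1, Alice ,19.99
2,BOB,5.00
2,BOB,5.00
3,carol,12.50
"""

def extract(text):
    """Extract: read raw rows from a CSV source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: normalize names, cast types, and drop duplicate order IDs."""
    seen, clean = set(), []
    for row in rows:
        order_id = int(row["order_id"])
        if order_id in seen:
            continue  # skip the duplicate
        seen.add(order_id)
        clean.append((order_id, row["customer"].strip().title(), float(row["amount"])))
    return clean

def load(conn, rows):
    """Load: store the cleaned rows in a queryable table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))

# Access: an analyst can now query clean data with plain SQL.
total = conn.execute("SELECT ROUND(SUM(amount), 2) FROM orders").fetchone()[0]
print(total)  # 37.49
```

In production, each function would be backed by a real source, a heavier transformation engine, and a proper warehouse, but the shape of the pipeline stays the same.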

If data is the new oil, then data engineers are the refinery workers—they take raw, unprocessed data and turn it into something valuable. Without them, businesses would be stuck with crude, unusable data.

Data Engineering vs. Data Science vs. ML Engineering

A lot of confusion exists between these roles, so let’s break it down with an analogy:

Data Engineers are the plumbers – They build and maintain the pipelines that transport clean, usable data.

Data Scientists are the chefs – They take the processed data and cook up insights, using statistics and machine learning to generate predictions.

Machine Learning Engineers are the robotics engineers – They use this refined data to train and deploy AI systems that make real-time decisions.

Without plumbers, neither the chefs nor the robotics engineers would have the clean supply their work depends on. Similarly, without data engineers, data scientists and ML engineers would struggle with unreliable, incomplete, or inaccessible data.

A Common Misconception: “Can’t We Just Use Raw Data?”

You might wonder—why go through all this effort? Can’t we just analyze the raw data as it is?

Well, here’s the problem: raw data is messy, inconsistent, and often full of errors. Imagine trying to cook with rotten vegetables, unmeasured spices, and random ingredients thrown together—that’s what working with raw data feels like.

Here are a few examples of what happens when data isn’t properly engineered:

An e-commerce company trying to recommend products gets duplicate, conflicting customer profiles due to bad data integration.

A financial institution miscalculates risk because transaction data from different systems doesn’t match up.

A self-driving car AI fails because sensor data wasn’t cleaned and structured properly before being used in training models.

In short, without data engineering, businesses make decisions based on incomplete, inconsistent, or even flat-out wrong data—which can be catastrophic.

So the next time you see a personalized recommendation, an AI making a smart prediction, or a real-time analytics dashboard, remember: there’s a data engineer behind the scenes, ensuring everything runs smoothly.

Why Data Engineering Matters – The Hidden Force Behind AI & Analytics

You know those eerily accurate Netflix recommendations? The way Uber predicts your fare before you even confirm a ride? Or how banks catch fraudulent transactions before they drain your account?

That’s data engineering at work—silently powering the AI models and analytics that shape our digital world.

But here’s the thing: without data engineering, even the most advanced AI models are useless. Why? Because raw data, in its natural state, is a chaotic, inconsistent mess.

Raw Data is Useless – Here’s Why

Imagine you’re handed a pile of random ingredients—some expired, some mislabeled, and some missing completely—and told to cook a gourmet meal. That’s what data scientists and AI models deal with when they receive raw, unprocessed data.

Companies generate data from countless sources—social media, customer transactions, IoT sensors, medical records—but this data is often:

Incomplete – Missing values, incorrect formats, or unstructured text that’s hard to interpret.

Redundant – Duplicate records, conflicting entries, and inconsistent timestamps.

Scattered – Data spread across different systems, making it hard to consolidate.

Slow to Process – Huge datasets with no proper indexing or optimization, leading to bottlenecks.
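The first two symptoms are easy to detect programmatically. The sketch below, a hypothetical helper rather than any specific tool's API, profiles a batch of records for missing values, duplicate keys, and timestamps that don't match an assumed expected format (`YYYY-MM-DD HH:MM:SS`).

```python
from collections import Counter
from datetime import datetime

def profile(records, key, ts_field, ts_format="%Y-%m-%d %H:%M:%S"):
    """Count missing values, duplicate keys, and timestamps not in the expected format."""
    missing = Counter()
    for rec in records:
        for field, value in rec.items():
            if value is None or value == "":
                missing[field] += 1
    key_counts = Counter(rec[key] for rec in records)
    duplicates = {k: n for k, n in key_counts.items() if n > 1}
    bad_timestamps = 0
    for rec in records:
        try:
            datetime.strptime(rec[ts_field], ts_format)
        except (TypeError, ValueError):  # None raises TypeError, wrong format raises ValueError
            bad_timestamps += 1
    return {"missing": dict(missing), "duplicate_keys": duplicates, "bad_timestamps": bad_timestamps}

records = [
    {"id": 1, "email": "a@example.com", "ts": "2024-03-01 10:00:00"},
    {"id": 2, "email": "", "ts": "03/01/2024 10:05"},                  # missing email, US-style date
    {"id": 2, "email": "b@example.com", "ts": "2024-03-01 10:05:00"},  # duplicate id
    {"id": 3, "email": None, "ts": None},                              # missing email and timestamp
]
report = profile(records, key="id", ts_field="ts")
print(report)
```

Dedicated data quality frameworks run checks like these automatically on every pipeline run.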

Let’s take a real-world example:

Imagine NASA trying to land a spacecraft on Mars. If telemetry from different sensors isn't cleaned and time-synchronized, the landing calculations could be thrown off by even a few milliseconds of misalignment, and in a landing sequence an error that small could be enough to doom the entire mission.

The same principle applies to businesses:

If an AI model predicting customer behavior gets incomplete purchase history, it might assume a loyal customer has abandoned the brand.

If an analytics dashboard at a hospital gets delayed patient data, doctors might miss critical signs of deterioration.

If a bank's fraud detection system receives duplicated transaction logs, it might flag legitimate purchases as suspicious while real fraud slips through unnoticed.

Without data engineering, AI models make flawed predictions, analytics dashboards display incorrect insights, and businesses make poor decisions—sometimes with devastating consequences.

Real-World Data Engineering in Action

To truly understand its importance, let’s look at how data engineering quietly powers some of the biggest tech companies:

Uber: The Data Engine Behind Every Ride

Ever wondered how Uber finds a driver in seconds and predicts your fare instantly?

Uber processes an enormous stream of location updates from drivers and riders worldwide.

Data engineers build real-time streaming pipelines that match riders with nearby drivers within seconds.

They optimize pricing models by analyzing demand surges, traffic conditions, and user behavior.

Without real-time data pipelines, Uber’s app would struggle to keep up, resulting in long wait times and chaotic pricing.

Netflix: How Data Engineering Fuels Your Next Binge

Why does Netflix always seem to know what you want to watch next?

Netflix collects billions of viewing logs daily—what you watch, when you pause, what you skip.

Data engineers design scalable data lakes that store this information efficiently.

Machine learning models use processed, structured data to personalize recommendations.

If Netflix relied on raw, unprocessed data, recommendations would be inaccurate, making the platform frustrating rather than addictive.

Banks & Fraud Detection: Stopping Cybercriminals in Real-Time

Card fraud is attempted constantly, around the clock. How do banks stop it?

Data engineers build pipelines that process huge volumes of transactions the moment they occur.

AI models flag unusual patterns—like a card being used in two countries at the same time.

Systems trigger real-time alerts, preventing fraudulent transactions before they complete.

Without fast, structured, and reliable data, banks would detect fraud too late, leading to massive financial losses.
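The "two countries at the same time" rule can be sketched as a simple check over a card's transaction history. The field names and the 60-minute window are hypothetical; real systems combine many such signals with machine learning models rather than relying on one rule.

```python
from datetime import datetime, timedelta

def flag_impossible_travel(transactions, window=timedelta(minutes=60)):
    """Flag a transaction if the same card was used in a different country within `window`."""
    last_seen = {}  # card -> (timestamp, country) of that card's previous transaction
    alerts = []
    # Sorting stands in for a stream that delivers events in time order.
    for tx in sorted(transactions, key=lambda t: t["ts"]):
        prev = last_seen.get(tx["card"])
        if prev and prev[1] != tx["country"] and tx["ts"] - prev[0] <= window:
            alerts.append(tx["id"])
        last_seen[tx["card"]] = (tx["ts"], tx["country"])
    return alerts

t0 = datetime(2024, 5, 1, 12, 0)
transactions = [
    {"id": "a", "card": "4111", "country": "US", "ts": t0},
    {"id": "b", "card": "4111", "country": "FR", "ts": t0 + timedelta(minutes=10)},  # suspicious
    {"id": "c", "card": "5500", "country": "DE", "ts": t0 + timedelta(minutes=5)},
    {"id": "d", "card": "5500", "country": "IT", "ts": t0 + timedelta(hours=5)},     # plausible trip
]
print(flag_impossible_travel(transactions))  # ['b']
```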

From ride-hailing to fraud prevention, data engineering is the invisible backbone of modern AI and analytics.

It ensures that data isn’t just collected—it’s cleaned, structured, stored, and made accessible in real-time so that businesses can make informed decisions.

So the next time you get a perfect Netflix recommendation or an instant Uber fare estimate, remember: there’s a team of data engineers working behind the scenes, making it all possible.

The Building Blocks of Data Engineering

Data engineering is like building a high-speed railway system for data. Just as trains need tracks, stations, and schedules to move efficiently, data needs pipelines, storage, and governance to flow seamlessly.

Without a solid foundation, data engineering systems collapse under the weight of inconsistent formats, broken pipelines, and slow processing times. Let's break down the key components that make data engineering work like a well-oiled machine.

1. Data Pipelines – The Backbone of Data Engineering

A data pipeline ensures that information flows smoothly from its source to its destination. Think of it as a coffee supply chain: raw beans (data) are sourced, transported, processed, and finally served as a refined product. A well-designed pipeline eliminates bottlenecks, maintains quality, and ensures timely delivery.

There are two main types of data pipelines:

Batch Processing Pipelines handle large volumes of data at scheduled intervals. These are ideal for tasks like generating daily sales reports or updating customer records.

Streaming Pipelines process data in real time as events occur. Systems like fraud detection, ride-hailing apps, and stock market platforms rely on streaming to make instant decisions.

For instance, when a customer swipes a credit card, a streaming pipeline immediately checks for fraudulent activity, while a batch pipeline aggregates all transactions at the end of the day for financial reporting.
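Here is a minimal sketch of that contrast in plain Python: the same hypothetical transactions are inspected one by one as they "arrive" (streaming style) and then aggregated in a single end-of-day run (batch style). The fixed amount threshold is only a placeholder for a real fraud check.

```python
from collections import defaultdict

transactions = [
    {"card": "A", "amount": 25.0},
    {"card": "B", "amount": 4200.0},
    {"card": "A", "amount": 60.0},
]

def stream_check(events, limit=1000.0):
    """Streaming style: inspect each event the moment it arrives."""
    for event in events:
        if event["amount"] > limit:
            yield f"ALERT card={event['card']} amount={event['amount']}"

def daily_batch_report(events):
    """Batch style: process the whole day's data in one scheduled run."""
    totals = defaultdict(float)
    for event in events:
        totals[event["card"]] += event["amount"]
    return dict(totals)

alerts = list(stream_check(iter(transactions)))
report = daily_batch_report(transactions)
print(alerts)  # ['ALERT card=B amount=4200.0']
print(report)  # {'A': 85.0, 'B': 4200.0}
```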

2. ETL vs. ELT – Two Approaches to Data Processing

Before data can be analyzed, it must be cleaned and structured. This is where ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) come in.

ETL extracts raw data, transforms it into a clean, structured format, and then loads it into a data warehouse. This method works best for structured data, where processing needs to happen before storage.

ELT extracts raw data, loads it directly into storage, and transforms it later as needed. This approach is more flexible and suited for big data environments, where unstructured data is stored first and processed on demand.

A simple analogy: ETL is like brewing coffee before serving it, ensuring that every cup is perfectly refined. ELT is like delivering raw beans to a café and letting baristas prepare them based on customer preferences.
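The difference is mostly about *where* the transformation happens. In this sketch, with hypothetical table names and SQLite standing in for a warehouse, ETL cleans the data in Python before loading, while ELT lands the raw strings first and transforms them with SQL inside the database.

```python
import sqlite3

raw = [("1", " 19.99 "), ("2", "5"), ("3", "n/a")]  # hypothetical raw price strings

def etl(conn):
    """ETL: clean in application code first, then load only the finished rows."""
    conn.execute("CREATE TABLE prices_etl (id INTEGER, price REAL)")
    clean = []
    for id_, price in raw:
        try:
            clean.append((int(id_), float(price)))
        except ValueError:
            pass  # unparseable rows never reach storage
    conn.executemany("INSERT INTO prices_etl VALUES (?, ?)", clean)

def elt(conn):
    """ELT: land raw strings as-is, then transform inside the database with SQL."""
    conn.execute("CREATE TABLE prices_raw (id TEXT, price TEXT)")
    conn.executemany("INSERT INTO prices_raw VALUES (?, ?)", raw)
    conn.execute("""
        CREATE TABLE prices_elt AS
        SELECT CAST(id AS INTEGER) AS id, CAST(TRIM(price) AS REAL) AS price
        FROM prices_raw
        WHERE TRIM(price) GLOB '[0-9]*'  -- crude check: keep values starting with a digit
    """)

conn = sqlite3.connect(":memory:")
etl(conn)
elt(conn)
print(conn.execute("SELECT * FROM prices_etl ORDER BY id").fetchall())  # [(1, 19.99), (2, 5.0)]
print(conn.execute("SELECT * FROM prices_elt ORDER BY id").fetchall())  # [(1, 19.99), (2, 5.0)]
```

Both approaches end with the same clean table, but with ELT the untouched `prices_raw` table is still available, so the transformation can be rewritten and re-run later without re-extracting anything.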

Data Storage – Choosing the Right Home for Your Data

Not all data is structured the same way, and the right storage solution depends on how the data will be used.

Data Warehouses are designed for structured, high-speed querying. They function like well-organized libraries where everything is indexed and easy to find.

Data Lakes store raw, unstructured data. Think of them as massive digital attics where all kinds of information—logs, images, and sensor data—are dumped and later processed as needed.

Lakehouses blend the strengths of both, offering the structured querying of a warehouse with the flexibility of a lake.

For example, an e-commerce company might use a data warehouse to generate revenue reports, while a data lake holds customer behavior logs that data scientists analyze for personalization. A lakehouse enables both structured business intelligence and AI-powered analytics in one system.
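One way to see the warehouse/lake distinction is "schema-on-write" versus "schema-on-read". In this sketch, a constrained SQLite table plays the warehouse and rejects malformed events, while a plain list of raw JSON lines plays the lake (a stand-in for files in object storage) and a schema is applied only when the data is read. The event format is hypothetical.

```python
import json
import sqlite3

events = [
    '{"user": "u1", "action": "click", "ms": 120}',
    '{"user": "u2", "action": "scroll"}',               # missing "ms"
    '{"user": "u3", "action": "click", "ms": "fast"}',  # wrong type
]

# Warehouse-style (schema-on-write): the table rejects rows that break its rules.
wh = sqlite3.connect(":memory:")
wh.execute("""CREATE TABLE clicks (
    user_id TEXT NOT NULL,
    action TEXT NOT NULL,
    ms INTEGER NOT NULL CHECK (typeof(ms) = 'integer'))""")
rejected = 0
for line in events:
    rec = json.loads(line)
    try:
        wh.execute("INSERT INTO clicks VALUES (?, ?, ?)", (rec["user"], rec["action"], rec.get("ms")))
    except sqlite3.IntegrityError:
        rejected += 1

# Lake-style (schema-on-read): keep every raw line, interpret it only at query time.
lake = list(events)
click_times = [r["ms"] for r in map(json.loads, lake) if isinstance(r.get("ms"), int)]

print(rejected)     # 2
print(click_times)  # [120]
```

The lake keeps all three events, so a future analysis with a different interpretation can still use them; the warehouse guarantees that every stored row is clean.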

3. Data Modeling – Structuring the Chaos

Raw data is often messy—filled with duplicates, missing values, and inconsistencies. Data modeling provides a structured framework to organize it efficiently.

Star Schema is simple and optimized for fast queries, making it ideal for analytics in data warehouses.

Snowflake Schema normalizes data further, reducing redundancy and improving storage efficiency.

Data Vault is designed for long-term scalability, storing historical data in a way that adapts to changes over time.

Imagine a star schema as a restaurant menu—clear, categorized, and easy to navigate. A snowflake schema is like a family tree, breaking down information into detailed relationships.
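A star schema in practice: one central fact table of events (sales) surrounded by dimension tables that describe them. The table and column names below are hypothetical, with SQLite standing in for a warehouse. A snowflake schema would normalize further, for example by moving `category` out of `dim_product` into its own `dim_category` table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables: descriptive attributes
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, name TEXT, category TEXT);
CREATE TABLE dim_date (date_id INTEGER PRIMARY KEY, day TEXT, month TEXT);

-- Fact table: one row per sale, with foreign keys pointing at each dimension
CREATE TABLE fact_sales (
    product_id INTEGER REFERENCES dim_product(product_id),
    date_id INTEGER REFERENCES dim_date(date_id),
    quantity INTEGER,
    revenue REAL
);

INSERT INTO dim_product VALUES (1, 'Espresso', 'Coffee'), (2, 'Latte', 'Coffee'), (3, 'Scone', 'Bakery');
INSERT INTO dim_date VALUES (20240101, '2024-01-01', '2024-01'), (20240102, '2024-01-02', '2024-01');
INSERT INTO fact_sales VALUES (1, 20240101, 3, 7.50), (2, 20240101, 2, 8.00), (3, 20240102, 4, 10.00);
""")

# A typical analytics query: join the fact table to its dimensions and aggregate.
revenue_by_category = conn.execute("""
    SELECT p.category, SUM(f.revenue) AS revenue
    FROM fact_sales f
    JOIN dim_product p ON p.product_id = f.product_id
    JOIN dim_date d ON d.date_id = f.date_id
    WHERE d.month = '2024-01'
    GROUP BY p.category
    ORDER BY revenue DESC
""").fetchall()
print(revenue_by_category)  # [('Coffee', 15.5), ('Bakery', 10.0)]
```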

4. Data Governance & Quality – Ensuring Trustworthy Data

Poor-quality data leads to bad decisions. Data governance establishes policies to keep data accurate, secure, and compliant. Key elements include:

Data Lineage tracks where data comes from and how it changes over time, ensuring transparency.

Data Quality Checks detect inconsistencies, missing values, and duplicates.

Security & Compliance safeguards sensitive information, ensuring adherence to regulations like GDPR and HIPAA.

For instance, banks use data lineage to show auditors exactly how a reported figure was derived from the underlying transactions, while healthcare providers enforce compliance rules to protect patient records.
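Lineage tools capture this metadata automatically, but the core idea fits in a few lines. This hypothetical decorator records which transformation ran, when, and how it changed the row count; a real system would write these entries to a metadata store instead of a Python list.

```python
import functools
from datetime import datetime, timezone

LINEAGE = []  # stand-in for a metadata store

def track_lineage(step):
    """Decorator that records which step ran, when, and how it changed the row count."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(rows):
            result = func(rows)
            LINEAGE.append({
                "step": step,
                "rows_in": len(rows),
                "rows_out": len(result),
                "at": datetime.now(timezone.utc).isoformat(),
            })
            return result
        return wrapper
    return decorator

@track_lineage("drop_null_amounts")
def drop_nulls(rows):
    return [r for r in rows if r["amount"] is not None]

@track_lineage("convert_to_cents")
def to_cents(rows):
    return [{**r, "amount": round(r["amount"] * 100)} for r in rows]

rows = [{"id": 1, "amount": 9.99}, {"id": 2, "amount": None}, {"id": 3, "amount": 1.5}]
result = to_cents(drop_nulls(rows))
for entry in LINEAGE:
    print(entry["step"], entry["rows_in"], "->", entry["rows_out"])
# drop_null_amounts 3 -> 2
# convert_to_cents 2 -> 2
```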

5. Orchestration – Automating Data Workflows

Manually managing complex data pipelines is impractical. Orchestration tools automate workflows, ensuring smooth execution from data extraction to storage.

Apache Airflow schedules and monitors workflows, providing visibility into data movement.

Prefect offers a Python-native approach, making it easier for engineers to build and manage pipelines.

Dagster provides fine-grained control over dependencies and execution logic.

A retail company might use Airflow to update sales dashboards daily without requiring human intervention, ensuring timely insights.
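Under the hood, an orchestrator's core job is to run tasks in dependency order and retry transient failures. This sketch uses Python's standard `graphlib` module (Python 3.9+) to illustrate that idea; it is not the API of Airflow, Prefect, or Dagster, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

def run_dag(tasks, dependencies, max_retries=2):
    """Run tasks in dependency order, retrying each failed task up to max_retries times."""
    log = []
    for name in TopologicalSorter(dependencies).static_order():
        for attempt in range(1, max_retries + 2):
            try:
                tasks[name]()
            except Exception:
                if attempt > max_retries:
                    raise RuntimeError(f"task {name!r} failed after {attempt} attempts")
                continue  # transient failure: try again
            log.append((name, attempt))
            break
    return log

calls = {"extract": 0}

def extract():
    calls["extract"] += 1
    if calls["extract"] == 1:
        raise ConnectionError("source temporarily unavailable")  # simulated transient failure

tasks = {"extract": extract, "transform": lambda: None, "load": lambda: None,
         "refresh_dashboard": lambda: None}
# Each key runs only after every task in its set has succeeded.
dependencies = {"transform": {"extract"}, "load": {"transform"}, "refresh_dashboard": {"load"}}
result = run_dag(tasks, dependencies)
print(result)  # [('extract', 2), ('transform', 1), ('load', 1), ('refresh_dashboard', 1)]
```

Real orchestrators add scheduling, parallel execution, persistent state, and a UI on top of this basic loop.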

6. Data Streaming – Handling Real-Time Information

Many applications require real-time data processing. Streaming platforms enable instant data flow and decision-making.

Apache Kafka powers event-driven applications, handling massive volumes of messages.

Apache Flink provides distributed stream processing with low latency.

Google Pub/Sub and AWS Kinesis offer cloud-native alternatives for real-time messaging.

For example, ride-hailing apps rely on Kafka to match drivers and riders in real time, ensuring seamless user experiences.
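Kafka's central idea is a topic split into partitions: messages with the same key land in the same partition, which preserves their order, and each consumer tracks its own read position (offset). The toy classes below imitate that model in memory. They are hypothetical illustrations, not the Kafka client API, and use `zlib.crc32` as a stand-in for Kafka's own key-hashing.

```python
import zlib

class ToyTopic:
    """In-memory imitation of a partitioned, append-only log (not a real Kafka client)."""

    def __init__(self, partitions=3):
        self.partitions = [[] for _ in range(partitions)]

    def produce(self, key, value):
        # Same key -> same partition, so one rider's updates stay in order.
        p = zlib.crc32(key.encode()) % len(self.partitions)
        self.partitions[p].append((key, value))
        return p

class ToyConsumer:
    """Reads each partition from its own offset, remembering how far it got."""

    def __init__(self, topic):
        self.topic = topic
        self.offsets = [0] * len(topic.partitions)

    def poll(self):
        batch = []
        for p, log in enumerate(self.topic.partitions):
            batch.extend(log[self.offsets[p]:])
            self.offsets[p] = len(log)
        return batch

topic = ToyTopic()
for i in range(3):
    topic.produce("rider-42", {"lat": 40.0 + i, "lon": -73.0})
topic.produce("driver-7", {"lat": 41.0, "lon": -74.0})

consumer = ToyConsumer(topic)
first = consumer.poll()
second = consumer.poll()  # nothing new since the last poll
```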

7. Infrastructure – Cloud vs. On-Premise

Where data is stored and processed depends on business needs.

Cloud Platforms (AWS, GCP, Azure) provide scalability, flexibility, and cost-effectiveness.

On-Premise Solutions offer greater control over hardware, data location, and security configuration, which is why industries like finance and government often favor them.

Hybrid Approaches combine both, keeping sensitive data on-premise while leveraging the cloud for analytics.

A startup might use Google BigQuery for easy, serverless analytics, while a government agency stores classified data on-premise for security reasons.

8. Bringing It All Together – A Day in the Life of a Data Engineer

Imagine you're designing a fraud detection system for a bank. Here’s how all these components come into play:

Data Streaming: Apache Kafka captures incoming transactions in real time.

Orchestration: Apache Airflow schedules the recurring ETL jobs that clean and structure the data.

Data Storage: Processed transactions are stored in a data lakehouse (Databricks/BigQuery).

AI Models: Machine learning algorithms analyze the data to detect fraudulent activity.

Data Governance: Compliance tools ensure regulatory standards are met.

If a fraudulent transaction is detected, an alert is triggered within milliseconds—preventing financial loss before it happens.

Data engineering isn’t just about moving data from point A to point B—it’s about ensuring reliability, efficiency, and trust. Without clean and structured data, AI models produce poor predictions, analytics dashboards display incorrect insights, and businesses make costly mistakes.

Every real-time recommendation, fraud alert, or stock market update you see is powered by a data engineering system working tirelessly behind the scenes.

That’s the power of data engineering.

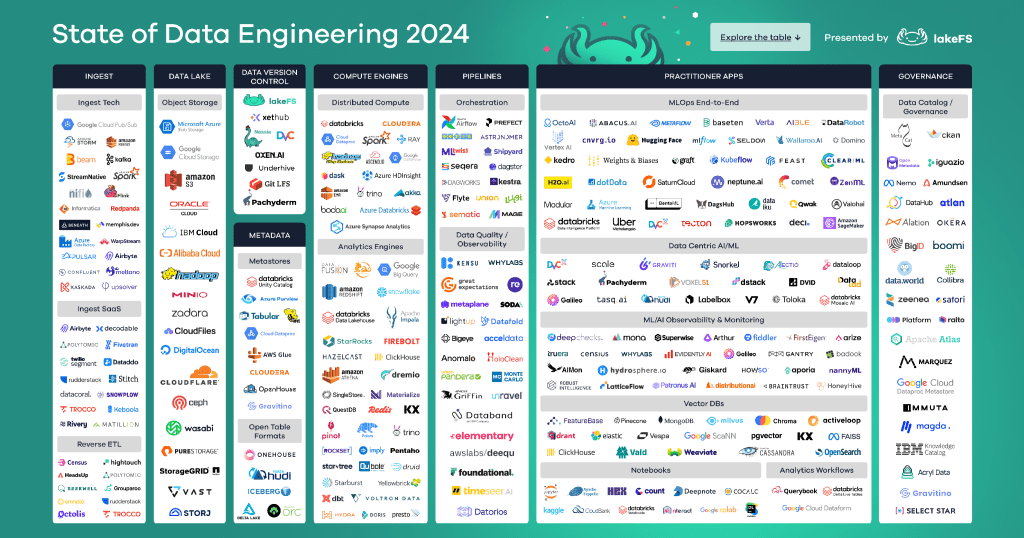

Tools & Technologies Every Data Engineer Uses

Data engineering relies on a robust ecosystem of tools that ensure efficient data collection, processing, storage, and automation. Whether dealing with structured databases, big data frameworks, or real-time streaming, data engineers must be proficient in a diverse set of technologies.

1. Programming Languages & Frameworks – The Core of Data Engineering

A data engineer’s job is to transform raw data into actionable insights, and this requires strong programming skills.

SQL – The Backbone of Data Manipulation

SQL (Structured Query Language) is fundamental for querying, transforming, and managing relational databases. Almost every company depends on SQL to retrieve and process structured data efficiently. Common SQL-based systems include PostgreSQL, MySQL, SQL Server, and Snowflake.

Python – The Versatile Workhorse

Python dominates data engineering due to its readability, vast ecosystem of libraries, and compatibility with various data processing frameworks. Libraries like Pandas, NumPy, PySpark, and Dask make Python ideal for handling data pipelines, automation, and integration with machine learning models.

Scala – Optimized for Big Data Processing

While Python is widely used, Scala is often preferred for large-scale distributed data processing, particularly with Apache Spark, which is itself written in Scala. Scala code runs directly on the JVM alongside Spark, avoiding the cost of passing data between Python and the JVM, which can matter for custom functions applied to massive datasets.

Java – Powering Enterprise Data Systems

Many enterprise data platforms run on the JVM: Apache Hadoop is written in Java, and Apache Kafka in Java and Scala. Data engineers working with high-performance, scalable data solutions often use Java to interact with these frameworks.

Go – Performance-Driven Data Engineering

The rise of real-time data systems has increased the demand for Go (Golang), particularly in streaming architectures and cloud-native data applications. Tools like Kubernetes and InfluxDB (up to version 2.x) are written in Go, taking advantage of its performance and lightweight concurrency.

Each language has its niche: SQL is indispensable for querying, Python is ideal for flexibility, Scala is optimized for big data, Java is dominant in enterprise solutions, and Go is growing in cloud-based data engineering.

2. Cloud Platforms & Infrastructure – Scaling Data Workflows

Modern data engineering requires scalable, fault-tolerant, and efficient cloud infrastructure. The three dominant cloud platforms are:

Amazon Web Services (AWS) – Offers a vast range of services for data engineering, including S3 (storage), Redshift (data warehouse), Glue (ETL), EMR (big data processing), and Lambda (serverless computing).

Google Cloud Platform (GCP) – Known for its powerful data analytics tools like BigQuery (serverless data warehouse), Dataflow (stream processing), and Cloud Composer (workflow orchestration with Airflow).

Microsoft Azure – Provides enterprise-grade data solutions such as Azure Synapse Analytics (data warehouse), Azure Data Factory (ETL), and Azure Data Lake Storage, or ADLS (data storage).

Each platform has strengths: AWS is the most widely used, GCP is especially strong in analytics and machine learning, and Azure integrates seamlessly with Microsoft products.

3. Orchestration & Workflow Automation – Managing Data Pipelines

Handling large-scale data processing manually is inefficient. Workflow orchestration tools automate pipeline execution, monitor dependencies, and ensure reliability.

Apache Airflow – The Industry Standard for Workflow Orchestration

Airflow enables scheduling, monitoring, and managing ETL jobs by defining Directed Acyclic Graphs (DAGs). It supports integration with multiple cloud platforms, databases, and data processing tools.

Prefect – A More Pythonic Alternative to Airflow

Prefect simplifies data workflows with a Python-native approach: ordinary Python functions become tasks and flows, with built-in retries, logging, and dependency management.

Dagster – Pipeline-First Orchestration

Dagster provides a structured way to define data pipelines around the data assets they produce, with built-in support for testing, monitoring, and debugging.

Choosing the right orchestration tool depends on the complexity of workflows, scalability requirements, and cloud or on-premise infrastructure preferences.

4. Big Data Frameworks – Processing Massive Datasets

Large-scale data requires specialized frameworks to handle distributed storage and computation.

Apache Spark – The De Facto Big Data Processing Engine

Spark supports distributed computing for large-scale data transformations. It integrates with Scala, Python (PySpark), and Java and is widely used in data engineering workflows.

Hadoop – Batch Processing for Large Datasets

Hadoop's MapReduce framework allows batch processing of vast amounts of data stored in HDFS (Hadoop Distributed File System). Spark is often preferred because it keeps intermediate results in memory instead of writing them to disk between stages, but Hadoop remains relevant in legacy systems.

Dask – Scalable Parallel Computing in Python

Unlike Spark, which runs on the JVM, Dask is written in Python and mirrors familiar APIs such as Pandas and NumPy. It can parallelize work across the cores of a single machine without any cluster, and scale out to a cluster when the data outgrows one machine.

Each of these frameworks is designed for distributed computing, allowing engineers to work with petabytes of data across multiple nodes efficiently.
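The MapReduce model that Hadoop popularized, and that Spark generalizes, boils down to three phases. This word-count sketch runs them in plain Python; the thread pool only illustrates that map tasks are independent and can run in parallel, whereas real frameworks distribute them across machines.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

documents = ["spark and hadoop", "hadoop mapreduce", "spark streaming and spark sql"]

def map_phase(doc):
    """Map: emit (word, 1) for every word in one input split."""
    return [(word, 1) for word in doc.split()]

def shuffle(mapped):
    """Shuffle: group all emitted values by key."""
    groups = defaultdict(list)
    for pairs in mapped:
        for word, count in pairs:
            groups[word].append(count)
    return groups

def reduce_phase(groups):
    """Reduce: combine each key's values into a single result."""
    return {word: sum(counts) for word, counts in groups.items()}

# Map tasks are independent, so they can run concurrently (here on threads).
with ThreadPoolExecutor(max_workers=3) as pool:
    mapped = list(pool.map(map_phase, documents))

word_counts = reduce_phase(shuffle(mapped))
print(word_counts["spark"], word_counts["hadoop"])  # 3 2
```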

5. Data Storage & Warehousing – Managing Structured and Unstructured Data

Choosing the right data storage solution depends on use cases, scalability, and query performance.

Data Warehouses – Optimized for Structured Analytics

Data warehouses store structured, relational data optimized for analytical queries. Popular solutions include Snowflake, Amazon Redshift, Google BigQuery, and Azure Synapse Analytics.

Data Lakes – Handling Raw, Unstructured Data

Data lakes store large amounts of raw data in various formats, including structured, semi-structured, and unstructured data. Common platforms include AWS S3, Azure Data Lake, and Google Cloud Storage.

Lakehouses – Combining the Best of Both Worlds

Lakehouses integrate the structure of data warehouses with the flexibility of data lakes. Databricks and Delta Lake are leading technologies in this space, enabling real-time analytics on structured and semi-structured data.

6. Real-Time Data Streaming – Processing Live Data Feeds

Not all data can be processed in batches. Many use cases require real-time processing to handle continuous data streams.

Apache Kafka – The Leader in Event Streaming

Kafka enables high-throughput, distributed event streaming for real-time analytics and messaging systems. It is widely used in financial transactions, log processing, and event-driven architectures.

Apache Flink – Real-Time Data Processing at Scale

Flink is optimized for low-latency stream processing and is commonly used in fraud detection, machine learning, and IoT analytics.

Google Pub/Sub and AWS Kinesis – Cloud-Native Streaming Solutions

These managed services provide seamless real-time data streaming for cloud applications, reducing operational overhead.

Real-time streaming is crucial for applications like fraud detection, recommendation engines, and live monitoring dashboards.

7. Data Governance & Security – Ensuring Reliability and Compliance

With the increasing volume of data, ensuring data quality, security, and compliance is critical.

Data Lineage – Tracking Data Movement

Tools like Apache Atlas and Alation help track data origin, transformations, and usage across pipelines.

Data Quality & Observability

Frameworks such as Great Expectations check that data meets predefined quality standards before it is processed further.

Security & Compliance – Protecting Sensitive Information

Compliance with regulations such as GDPR and HIPAA, and with audit standards such as SOC 2, requires robust security measures, including data encryption, access controls, and audit logs.

Data governance frameworks ensure that businesses can trust their data, avoid compliance risks, and maintain data integrity.
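One common protective measure is to pseudonymize identifiers before data reaches analysts. This sketch replaces a patient ID with a keyed hash (HMAC-SHA256 from Python's standard library), so the same ID always maps to the same token for joins but cannot be reversed without the key, and masks an email address. The key, field names, and masking rule are hypothetical. Under GDPR, pseudonymized data still counts as personal data, so this reduces risk rather than removing obligations.

```python
import hashlib
import hmac

# Hypothetical secret; in practice it would come from a secrets manager, never source code.
SECRET_KEY = b"replace-with-a-managed-secret"

def pseudonymize(value: str) -> str:
    """Replace an identifier with a keyed hash: stable for joins, not reversible without the key."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()

def mask_email(email: str) -> str:
    """Keep only enough of an email address for a human to recognize it."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"

record = {"patient_id": "P-1001", "email": "jane.doe@example.com", "diagnosis_code": "E11"}
safe = {
    "patient_key": pseudonymize(record["patient_id"]),
    "email": mask_email(record["email"]),
    "diagnosis_code": record["diagnosis_code"],
}
print(safe["email"])  # j***@example.com
```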

Mastering data engineering requires expertise across programming, big data frameworks, cloud platforms, workflow automation, and data governance. These tools form the backbone of modern data ecosystems, enabling companies to process, store, and analyze vast amounts of data efficiently. As data continues to grow in scale and complexity, the demand for skilled data engineers who understand these technologies will only increase.

Breaking Into Data Engineering – A Roadmap for Beginners

Becoming a data engineer requires a combination of technical skills, hands-on experience, and an understanding of how data flows through an organization. Here’s a structured roadmap to help you get started.

Step 1: Master the Core Skills

Before diving into complex architectures and large-scale data processing, it’s essential to build a strong foundation in the fundamental concepts and technologies that power data engineering.

1. Learn SQL – The Grammar of Data

SQL (Structured Query Language) is the most critical skill for any data engineer. It is used to query, transform, and manipulate structured data in relational databases.

Start with basic SQL operations: SELECT, INSERT, UPDATE, DELETE

Learn how to join tables using INNER JOIN, LEFT JOIN, RIGHT JOIN, and FULL JOIN

Practice writing complex queries involving GROUP BY, HAVING, and window functions (OVER, PARTITION BY)

Understand indexing and optimization techniques to improve query performance

Databases to practice on: PostgreSQL, MySQL, SQL Server, Snowflake, and BigQuery.
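You can practice most of these skills without installing a database server: Python ships with SQLite. The sketch below, using hypothetical `customers` and `orders` tables, exercises a LEFT JOIN, GROUP BY with HAVING, an index, and a window function. Window functions assume SQLite 3.25 or newer, which recent Python builds include.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
INSERT INTO customers VALUES (1, 'Ana'), (2, 'Ben'), (3, 'Cy');
INSERT INTO orders VALUES (1, 1, 50), (2, 1, 30), (3, 2, 80), (4, 2, 10), (5, 2, 15);
CREATE INDEX idx_orders_customer ON orders (customer_id);  -- speeds up lookups by customer
""")

# LEFT JOIN keeps customers with no orders; HAVING filters on the aggregate.
big_spenders = conn.execute("""
    SELECT c.name, COUNT(o.id) AS n_orders, COALESCE(SUM(o.amount), 0) AS total
    FROM customers c LEFT JOIN orders o ON o.customer_id = c.id
    GROUP BY c.id
    HAVING total >= 80
    ORDER BY total DESC
""").fetchall()

# Window function: rank each customer's orders by size without collapsing rows.
ranked = conn.execute("""
    SELECT customer_id, amount,
           RANK() OVER (PARTITION BY customer_id ORDER BY amount DESC) AS rnk
    FROM orders
    ORDER BY customer_id, rnk
""").fetchall()
print(big_spenders)  # [('Ben', 3, 105.0), ('Ana', 2, 80.0)]
```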

2. Understand ETL Pipelines – The Backbone of Data Engineering

ETL (Extract, Transform, Load) is a fundamental concept in data engineering. It involves collecting data from different sources, transforming it into a usable format, and loading it into a database or data warehouse.

Learn the key components of ETL: data extraction, data transformation, and data loading

Work with tools like Apache Airflow, Apache NiFi, dbt, or Luigi

Understand data validation, schema evolution, and error handling in ETL pipelines

Start by building simple ETL projects:

Extract data from an API or CSV file

Transform data using Python and Pandas

Load data into a PostgreSQL or MySQL database
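Once the happy path works, the validation and error-handling points above are what make a pipeline trustworthy. In this sketch, the standard-library `csv` module and SQLite stand in for Pandas and PostgreSQL, and the weather data is hypothetical. Bad rows are set aside with a reason instead of crashing the run, and the load is an upsert so re-running the job never duplicates data. The `INSERT ... ON CONFLICT ... DO UPDATE` syntax also works in PostgreSQL; in SQLite it requires version 3.24 or newer.

```python
import csv
import io
import sqlite3

RAW = """city,date,temp_c
Oslo,2024-06-01,14.2
Lima,2024-06-01,not_available
Oslo,2024-06-01,14.5
Cairo,,35.0
"""

def validate(row):
    """Return (clean_row, None) or (None, reason) so one bad record can't crash the run."""
    if not row["date"]:
        return None, "missing date"
    try:
        temp = float(row["temp_c"])
    except ValueError:
        return None, f"bad temperature {row['temp_c']!r}"
    return (row["city"], row["date"], temp), None

def run(conn, text):
    conn.execute("""CREATE TABLE IF NOT EXISTS weather (
        city TEXT, date TEXT, temp_c REAL, PRIMARY KEY (city, date))""")
    good, rejects = [], []
    for row in csv.DictReader(io.StringIO(text)):
        clean, reason = validate(row)
        if clean:
            good.append(clean)
        else:
            rejects.append((row, reason))
    # Upsert: a repeated (city, date) updates the existing row instead of duplicating it.
    conn.executemany("""INSERT INTO weather VALUES (?, ?, ?)
        ON CONFLICT (city, date) DO UPDATE SET temp_c = excluded.temp_c""", good)
    conn.commit()
    return rejects

conn = sqlite3.connect(":memory:")
rejects = run(conn, RAW)
run(conn, RAW)  # a second run is harmless
print(conn.execute("SELECT * FROM weather").fetchall())  # [('Oslo', '2024-06-01', 14.5)]
print([reason for _, reason in rejects])  # ["bad temperature 'not_available'", 'missing date']
```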

3. Get Comfortable with Cloud Platforms

Cloud platforms are essential in modern data engineering. Most companies rely on cloud-based infrastructure for scalability and cost efficiency.

Amazon Web Services (AWS) – Learn services like S3 (storage), Redshift (data warehouse), Glue (ETL), and Lambda (serverless computing)

Google Cloud Platform (GCP) – Explore BigQuery, Dataflow, and Cloud Storage

Microsoft Azure – Get familiar with Azure Data Lake, Azure Synapse Analytics, and Data Factory

Start with free-tier cloud accounts and practice setting up storage, running SQL queries, and orchestrating basic ETL jobs.

Step 2: Learn by Doing

Theory alone won’t make you a data engineer. You need to apply your knowledge by building real-world projects that showcase your skills.

1. Build an ETL Pipeline Using Python & SQL

A strong portfolio project is an end-to-end ETL pipeline that collects, processes, and stores data.

Extract data from a public API (e.g., weather data, stock market data)

Transform data using Pandas, PySpark, or SQL queries

Load the processed data into PostgreSQL, MySQL, or Snowflake

Schedule and automate the pipeline using Apache Airflow or Prefect

Bonus: Deploy your ETL pipeline on AWS Lambda or GCP Cloud Functions.
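Public APIs fail intermittently, so the extract step usually retries with exponential backoff. This is a minimal sketch using only the standard library; the URL is a placeholder, and the `fetch` and `sleep` parameters are injectable so the logic can be tested without a network. Note that `urllib.error.HTTPError` is a subclass of `URLError`, so HTTP errors are retried too; a production job might skip retries for client errors such as 404.

```python
import json
import time
import urllib.error
import urllib.request

def fetch_json(url, timeout=10):
    """Download and parse a JSON document (the real network call)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)

def extract_with_retries(url, fetch=fetch_json, attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call `fetch`, waiting base_delay * 2**n seconds after the n-th failed attempt."""
    for n in range(attempts):
        try:
            return fetch(url)
        except (urllib.error.URLError, TimeoutError):
            if n == attempts - 1:
                raise  # out of attempts: let the orchestrator see the failure
            sleep(base_delay * 2 ** n)  # 1s, 2s, 4s, ...

# Usage with a fake fetcher that fails twice, so no network is needed.
failures = iter([urllib.error.URLError("timeout"), urllib.error.URLError("timeout")])
delays = []

def flaky_fetch(url):
    err = next(failures, None)
    if err is not None:
        raise err
    return {"city": "Oslo", "temp_c": 14.2}

data = extract_with_retries("https://api.example.com/weather", fetch=flaky_fetch, sleep=delays.append)
print(data, delays)  # {'city': 'Oslo', 'temp_c': 14.2} [1.0, 2.0]
```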

2. Create a Data Warehouse Project with PostgreSQL

A well-designed data warehouse helps organizations analyze historical data efficiently.

Step 1: Design a Star Schema or Snowflake Schema for structured data storage

Step 2: Load transactional data into PostgreSQL using ETL tools

Step 3: Optimize queries with indexing and partitioning

Step 4: Run analytical queries to generate business insights

Try using dbt (data build tool) to transform raw data into meaningful business metrics.
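To see what indexing (Step 3) actually buys you, ask the database for its query plan. This sketch uses SQLite's `EXPLAIN QUERY PLAN` on a hypothetical `sales` table; PostgreSQL's equivalent is `EXPLAIN`. The exact wording of the plan varies between SQLite versions, but it typically changes from a full `SCAN` to a `SEARCH ... USING INDEX` once the index exists. Partitioning is not shown because SQLite does not support it, while PostgreSQL offers declarative table partitioning.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (id INTEGER PRIMARY KEY, sale_date TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales (sale_date, amount) VALUES (?, ?)",
    [(f"2024-01-{day:02d}", day * 10.0) for day in range(1, 29)],
)

QUERY = "SELECT SUM(amount) FROM sales WHERE sale_date BETWEEN '2024-01-10' AND '2024-01-12'"

def plan(sql):
    """Return the human-readable detail column of SQLite's query plan."""
    return " | ".join(row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))

before = plan(QUERY)  # typically a full scan: every row is read
conn.execute("CREATE INDEX idx_sales_date ON sales (sale_date)")
after = plan(QUERY)   # typically a search using idx_sales_date
total = conn.execute(QUERY).fetchone()[0]
print(before)
print(after)
print(total)  # 330.0
```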

3. Process Streaming Data Using Kafka

Streaming data processing is crucial for handling real-time applications like fraud detection, social media analytics, and live dashboards.

Set up Apache Kafka to stream real-time data

Produce and consume messages using Python (the kafka-python library) or Java

Integrate Kafka with Apache Spark Structured Streaming or Flink for real-time analytics

Store processed data in a data lake (AWS S3, GCP Cloud Storage) or a NoSQL database (Cassandra, MongoDB)

Real-world use case: Build a Twitter sentiment analysis pipeline where tweets are streamed via Kafka, processed in real time, and visualized in a dashboard
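A core operation in such a pipeline is aggregating events over time windows, for example counting positive and negative posts per minute. Spark Structured Streaming and Flink provide this natively; the sketch below shows the idea of a tumbling (fixed, non-overlapping) window in plain Python with hypothetical `(timestamp_seconds, label)` events, and is not either engine's API.

```python
from collections import Counter, defaultdict

def tumbling_window_counts(events, window_seconds=60):
    """Group (timestamp, label) events into fixed, non-overlapping windows and count labels."""
    windows = defaultdict(Counter)
    for ts, label in events:
        window_start = ts - ts % window_seconds  # e.g. ts=125 falls in the window starting at 120
        windows[window_start][label] += 1
    return {start: dict(counts) for start, counts in sorted(windows.items())}

events = [(5, "positive"), (42, "negative"), (61, "positive"), (118, "positive"), (125, "negative")]
print(tumbling_window_counts(events))
# {0: {'positive': 1, 'negative': 1}, 60: {'positive': 2}, 120: {'negative': 1}}
```

This version assumes all events are already available. Streaming engines instead emit results incrementally and use watermarks to decide how long to wait for late, out-of-order events before closing a window.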

Next Steps: Advancing Your Data Engineering Career

Once you’ve built foundational skills and hands-on projects, the next step is to deepen your expertise:

Learn about data modeling and schema design

Master workflow orchestration with Apache Airflow

Explore big data frameworks like Spark and Hadoop

Gain experience with infrastructure as code (IaC) using Terraform

Understand CI/CD for data pipelines, along with containerization using Docker and Kubernetes

Work on end-to-end data engineering projects that involve data ingestion, transformation, and visualization

Breaking into data engineering requires dedication, structured learning, and hands-on experience. By mastering SQL, building ETL pipelines, working with cloud platforms, and tackling real-world projects, you’ll be well on your way to becoming a data engineer. The key is consistency—keep learning, keep experimenting, and refine your skills through practical application.

We’ve explored the foundations of data engineering and why it serves as the backbone of AI, analytics, and modern business intelligence. From mastering SQL to building scalable ETL pipelines, data engineers play a crucial role in turning raw information into actionable insights.

But understanding the fundamentals is just the beginning. How does raw data actually transform into structured, usable insights? What are the key challenges in data ingestion, transformation, and storage?

In our next issue of The Data Journey: Raw Data to Usable Insights, we’ll break down the entire data lifecycle—covering ingestion, transformation, storage, and analytics with real-world examples and best practices.

Stay tuned as we continue this journey into the world of data engineering!

Want to go deeper? Subscribe to stay updated and never miss an issue.

Have questions or thoughts? Drop them in the comments—I’d love to hear your perspective.

If you found this valuable, share it with others who are curious about data engineering. Let’s build a community of data enthusiasts together.

See you in the next edition of The Data Journey: Raw Data to Usable Insights.